Addition Theorems on Probability

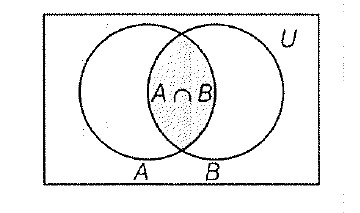

If `P(A + B)` or `P(A cup B)` denotes the probability that at least one of the events `A` or `B` occurs, and

`P(AB)` or `P(A cap B)` denotes the probability that `A` and `B` occur together, then the following results hold.

(i) When Events are Not Mutually Exclusive

If `A` and `B` are any two events (not necessarily mutually exclusive), then

`P(A cup B)= P(A) + P(B)- P(A cap B)`

or `P(A +B)= P(A) + P(B)- P(AB)`
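The following is a minimal sketch that checks the two-event addition theorem by direct counting. It assumes one roll of a fair six-sided die, so every outcome is equally likely and probabilities can be kept exact with `fractions.Fraction`. The events `A` and `B` and the helper `prob` are hypothetical choices made only for illustration.

```python
from fractions import Fraction

# Hypothetical example: one roll of a fair six-sided die (equally likely outcomes).
S = {1, 2, 3, 4, 5, 6}

def prob(event):
    """Classical probability: favourable outcomes / total outcomes."""
    return Fraction(len(event), len(S))

A = {2, 4, 6}   # "the roll is even"
B = {4, 5, 6}   # "the roll is greater than 3"

lhs = prob(A | B)                        # P(A cup B), counted directly
rhs = prob(A) + prob(B) - prob(A & B)    # P(A) + P(B) - P(A cap B)
print(lhs, rhs)  # 2/3 2/3
```

The two outcomes `4` and `6` lie in both `A` and `B`. Simply adding `P(A)` and `P(B)` would count them twice, so `P(A cap B)` is subtracted once.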

For any three events `A`, `B`, `C`,

`P (A cup B cup C)= P(A) + P(B) + P(C)- P(A cap B)- P(B cap C)- P(C cap A)+ P(A cap B cap C)`

or `P(A + B +C)= P(A) + P(B) + P(C)- P(AB)- P(BC) - P(CA) + P(ABC)`
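As a sketch of the three-event formula, the code below assumes an integer drawn uniformly at random from `1` to `30`. It takes `A`, `B`, `C` to be "divisible by 2", "divisible by 3" and "divisible by 5"; these events are hypothetical and serve only to give overlapping sets of different sizes.

```python
from fractions import Fraction

# Hypothetical example: an integer drawn uniformly at random from 1..30.
S = set(range(1, 31))

def prob(event):
    return Fraction(len(event), len(S))

A = {n for n in S if n % 2 == 0}   # divisible by 2
B = {n for n in S if n % 3 == 0}   # divisible by 3
C = {n for n in S if n % 5 == 0}   # divisible by 5

direct = prob(A | B | C)
formula = (prob(A) + prob(B) + prob(C)
           - prob(A & B) - prob(B & C) - prob(C & A)
           + prob(A & B & C))
print(direct, formula)  # 11/15 11/15
```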

(ii) When Events are Mutually Exclusive

If `A` and `B` are mutually exclusive events, then

`n (A cap B)=0 => P(A cap B)=0`

`P(A cup B)= P(A) + P(B)`
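Here is a small sketch, again assuming one roll of a fair die, that shows the subtraction term vanishing when the events share no outcomes. The particular events `A = {1, 2}` and `B = {5, 6}` are hypothetical.

```python
from fractions import Fraction

# Hypothetical example: one roll of a fair die.
S = {1, 2, 3, 4, 5, 6}

def prob(event):
    return Fraction(len(event), len(S))

A = {1, 2}   # "roll is 1 or 2"
B = {5, 6}   # "roll is 5 or 6"

assert A & B == set()                  # n(A cap B) = 0: A and B are mutually exclusive
print(prob(A & B))                     # 0
print(prob(A | B), prob(A) + prob(B))  # 2/3 2/3
```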

For any three events `A`, `B`, `C` which are (pairwise) mutually exclusive,

`P(A cap B) = P(B cap C) = P(C cap A) = P(A cap B cap C) = 0`

`:. P(A cup B cup C) = P(A) + P(B) + P(C)`

The probability that any one of several mutually exclusive events occurs is equal to the sum of their probabilities, i.e. if `A_1, A_2, ..., A_n` are mutually exclusive events, then

`P(A_1 + A_2 + ... + A_n) = P(A_1) + P(A_2) + ... + P(A_n)`

i.e., `P(sum A_i) = sum P(A_i)`
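The sketch below applies `P(sum A_i) = sum P(A_i)` to a list of events. It assumes a fair die as the sample space. Before summing, it checks that every pair of events is disjoint, because the plain sum is only valid for mutually exclusive events. The function name `prob_union_exclusive` and the chosen events are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

S = set(range(1, 7))  # hypothetical: one roll of a fair die

def prob(event):
    return Fraction(len(event), len(S))

def prob_union_exclusive(events):
    """P(A_1 + ... + A_n) = sum P(A_i); valid only for mutually exclusive events."""
    # Guard: every pair of events must be disjoint.
    for X, Y in combinations(events, 2):
        if X & Y:
            raise ValueError("events are not mutually exclusive")
    return sum((prob(E) for E in events), Fraction(0))

events = [{1}, {2, 3}, {6}]
print(prob_union_exclusive(events))   # 2/3
print(prob(set().union(*events)))     # 2/3
```

Raising an error for overlapping events is a design choice. For such events the sum overstates the probability, and the general formula in (iv) is needed instead.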

(iii) Some Other Theorems

(a) Let `A` and `B` be two events associated with a random experiment, then

`P(bar A cap B)= P(B)- P(A cap B)`

`P(A cap bar B)= P(A)- P(A cap B)`
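Both identities in (a) can be checked by counting, as in the minimal sketch below. It assumes a fair die and uses the hypothetical events "even" and "at most 3". The complement `bar A` is taken as `S - A`.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # hypothetical: one roll of a fair die

def prob(event):
    return Fraction(len(event), len(S))

def complement(event):
    return S - event    # bar E = all outcomes not in E

A = {2, 4, 6}  # even
B = {1, 2, 3}  # at most 3

print(prob(complement(A) & B), prob(B) - prob(A & B))  # 1/3 1/3
print(prob(A & complement(B)), prob(A) - prob(A & B))  # 1/3 1/3
```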

(b) If `B subset A`, then `P(A cap bar B) = P(A) - P(B)`, and since `P(A cap bar B) ge 0`,

`P(B) le P(A)`

Similarly, if `A subset B`, then `P(bar A cap B) = P(B) - P(A)`, and hence

`P(A) le P(B)`
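In the following sketch of the subset case, `B` ("even and greater than 3") is a hypothetical event contained in `A` ("even"), and the sample space is again assumed to be one roll of a fair die.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # hypothetical: one roll of a fair die

def prob(event):
    return Fraction(len(event), len(S))

A = {2, 4, 6}   # even
B = {4, 6}      # even and greater than 3, so B is a subset of A

assert B <= A                                # B subset A
print(prob(A & (S - B)), prob(A) - prob(B))  # 1/6 1/6
print(prob(B) <= prob(A))                    # True
```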

NOTE: The probability that neither `A` nor `B` occurs is

`P(bar A cap bar B) = P(bar(A cup B)) = 1- P(A cup B)`
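As a quick check of this complement rule, the sketch below assumes a fair die with the hypothetical events "even" and "greater than 3". It forms `bar A cap bar B` directly and compares the result with `1 - P(A cup B)`.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # hypothetical: one roll of a fair die

def prob(event):
    return Fraction(len(event), len(S))

A = {2, 4, 6}   # even
B = {4, 5, 6}   # greater than 3

neither = (S - A) & (S - B)            # bar A cap bar B
print(sorted(neither))                 # [1, 3]
print(prob(neither), 1 - prob(A | B))  # 1/3 1/3
```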

(iv) Generalisation of the Addition Theorem

If `A_1, A_2, ..., A_n` are `n` events associated with a random experiment, then

`P(cup_(i=1)^n A_i) = sum_(i=1)^n P(A_i) - sum_(1 le i lt j le n) P(A_i cap A_j) + sum_(1 le i lt j lt k le n) P(A_i cap A_j cap A_k) - ... + (-1)^(n-1) P(A_1 cap A_2 cap ... cap A_n)`

If the events `A_i`, `i = 1, 2, ..., n`, are mutually exclusive, then `P(cup_(i=1)^n A_i) = sum_(i=1)^n P(A_i)`
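The generalised formula can be sketched directly in code. For each `k` from `1` to `n`, the function below adds `(-1)^(k-1)` times the sum of `P(A_(i_1) cap ... cap A_(i_k))` over all index sets `i_1 lt ... lt i_k`, which `itertools.combinations` enumerates. The sample space (an integer drawn uniformly from `1` to `30`), the divisibility events and the name `union_prob` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

S = set(range(1, 31))  # hypothetical: integer drawn uniformly from 1..30

def prob(event):
    return Fraction(len(event), len(S))

def union_prob(events):
    """Inclusion-exclusion: P(cup A_i) = sum_k (-1)^(k-1) * sum of P(k-fold intersections)."""
    total = Fraction(0)
    for k in range(1, len(events) + 1):
        sign = (-1) ** (k - 1)
        for group in combinations(events, k):   # each index set i_1 < ... < i_k once
            total += sign * prob(set.intersection(*group))
    return total

events = [{m for m in S if m % d == 0} for d in (2, 3, 5, 7)]
print(union_prob(events), prob(set().union(*events)))  # 23/30 23/30
```

The number of terms grows as `2^n - 1`, so this direct form suits small `n`. For mutually exclusive events every intersection is empty, and the result reduces to `sum P(A_i)`.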

NOTE

`• P(bar A cap bar B) = 1- P(A cup B)`

`• P(bar A cup bar B) = P(bar(A cap B)) = 1 - P(A cap B)`

`• P(A) = P(A cap B)+ P(A cap bar B)`

`• P(B) = P(B cap A)+ P(B cap bar A)`

`• P` (exactly one of `E_1`, `E_2` occurs), where `E'` denotes the complement `bar E`,

`= P(E_1 cap E_2' ) + P(E_1' cap E_2)`

`= P(E_1)- P(E_1 cap E_2 ) + P(E_2) - P(E_1 cap E_2)`

`= P(E_1) + P(E_2 )- 2P(E_1 cap E_2)`

`• P` (neither `E_1` nor `E_2`) `= P(E_1' cap E_2') = 1 - P(E_1 cup E_2)`

`• P(E_1' cup E_2') = 1- P(E_1 cap E_2)`
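The identities in this note can be checked together by counting. The sketch below assumes one roll of a fair die and uses the hypothetical events `E_1` = "even" and `E_2` = "greater than 3". It verifies the decomposition of `P(E_1)`, the "exactly one" formula and the complement rule for `P(E_1' cup E_2')`.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # hypothetical: one roll of a fair die

def prob(event):
    return Fraction(len(event), len(S))

E1 = {2, 4, 6}              # even
E2 = {4, 5, 6}              # greater than 3
E1c, E2c = S - E1, S - E2   # complements E_1', E_2'

# P(A) = P(A cap B) + P(A cap bar B)
print(prob(E1), prob(E1 & E2) + prob(E1 & E2c))  # 1/2 1/2

# Exactly one of E_1, E_2 occurs
exactly_one = (E1 & E2c) | (E1c & E2)
print(prob(exactly_one), prob(E1) + prob(E2) - 2 * prob(E1 & E2))  # 1/3 1/3

# P(E_1' cup E_2') = 1 - P(E_1 cap E_2)
print(prob(E1c | E2c), 1 - prob(E1 & E2))  # 2/3 2/3
```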
