(i) `-1 le r(x, y) le 1`, i.e. the correlation coefficient always lies between -1 and 1.

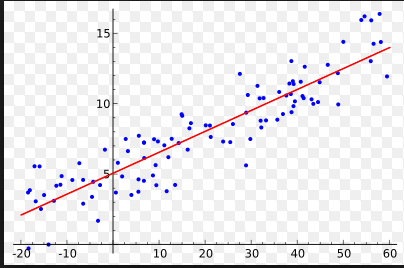

(ii) If `r = 1`, the correlation is perfectly positive: all points `(x, y)` lie on a straight line with positive slope.

(iii) If `r = -1`, the correlation is perfectly negative: all points `(x, y)` lie on a straight line with negative slope.

(iv) The correlation coefficient is a pure number independent of the unit of measurement.

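(v) The coefficient of correlation is independent of change of origin and of scale, provided the scale factors have the same sign; if they have opposite signs, only the sign of `r` is reversed (see (ix)).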

(vi) If `-1 < r < 1`, the magnitude `|r|` indicates the degree (strength) of the linear relationship between `x` and `y`, whereas its sign indicates the direction of the relationship.

(vii) If `x` and `y` are two independent variables, `r = 0`.

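(viii) If `r(x, y) = 0`, `x` and `y` are said to be uncorrelated. This does not imply that the two variates are independent, so the converse of (vii) does not hold. For example, if `x` takes the values `-2, -1, 0, 1, 2` and `y = x^2`, then `r(x, y) = 0` even though `y` is completely determined by `x`.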

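(ix) If `x` and `y` are random variables and `a, b, c` and `d` are any numbers such that `a ne 0, c ne 0`, then

`r(ax+b, cy+d) = (|ac|)/(ac) r(x, y)`

that is, `r` is unchanged when `ac > 0` and only changes sign when `ac < 0`. This follows because `cov(ax+b, cy+d) = ac cov(x, y)`, `sigma_(ax+b) = |a| sigma_x` and `sigma_(cy+d) = |c| sigma_y`, so `r(ax+b, cy+d) = (ac)/(|a||c|) r(x, y)`, and `(ac)/(|ac|) = (|ac|)/(ac)` because both equal `+-1`.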
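Several of these properties can be checked numerically. The following is a minimal Python sketch under stated assumptions, not a definitive implementation: the helper names `pearson_r` and `affine` and the sample data are hypothetical, chosen only for illustration. `pearson_r` computes Karl Pearson's `r` from its definition (covariance divided by the product of the standard deviations, with the common `1/n` factors cancelled), and `affine` applies a change of origin and scale `v -> scale*v + shift`.

```python
import math


def pearson_r(xs, ys):
    """Correlation coefficient r(x, y) of paired samples (hypothetical helper).

    Uses r = S_xy / sqrt(S_xx * S_yy); the 1/n factors in the covariance
    and in the two standard deviations cancel, so they are omitted.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_yy = sum((y - mean_y) ** 2 for y in ys)
    if s_xx == 0 or s_yy == 0:
        # r is undefined when either variable has zero variance
        raise ValueError("r is undefined for a constant variable")
    return s_xy / math.sqrt(s_xx * s_yy)


def affine(vs, scale, shift):
    """Change of scale and origin (hypothetical helper): v -> scale * v + shift."""
    return [scale * v + shift for v in vs]


x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]

r = pearson_r(x, y)
print(r)                                  # 0.8, inside [-1, 1] as in (i)
print(pearson_r(x, affine(x, 3, 1)))      # 1.0: exact line, positive slope (ii)
print(pearson_r(x, affine(x, -2, 5)))     # -1.0: exact line, negative slope (iii)

# (ix): a = 2, c = -3, so ac < 0 and only the sign of r flips
print(pearson_r(affine(x, 2, 7), affine(y, -3, 1)))  # -0.8

# (viii): y = x^2 is completely determined by x, yet r = 0
u = [-2, -1, 0, 1, 2]
print(pearson_r(u, [v * v for v in u]))   # 0.0
```

Rejecting samples with a zero sum of squares mirrors the fact that `r` is undefined when either variable is constant. The printed values are exact for these small integer samples; for general data, compare results with a tolerance such as `math.isclose` rather than with `==`.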